VPEC-T stands for Values, Policies, Events, Content and Trust. Green and Bate propose this as a framework for thinking about activities involving the exploitation of information in companies:

- Values represent what the company, the users and everyone else involved (and that turns out to be important, as we'll see later) are looking to gain from an information system.

- Policies are controls that limit how the information is handled. The policies may have internal or external origins.

- Events are things that happen in a business that trigger a chain of actions that lead to the business serving its customers, collecting its income, managing its staff and generally meeting its obligations.

- Content comprises information in any form of message that flows around the business and, where appropriate, outside it.

- Trust can be an issue almost anywhere, and Green and Bate propose that it be considered throughout the investigation and design of an information system.

You know the things - put them on the front of a camera lens and you get five different views of the world. Not a bad analogy: consciously looking at a planned information system through this lens first provides views from five different directions. If you're familiar with de Bono's Six Thinking Hats, this could be seen as a similar but more specialized thinking tool.

Considering Values, Policies and Events means that information systems practitioners will have to think about the business, not information technology (yet). The Content considered is not just the data in computer systems, but all the information flowing around. Some examples:

- an email about a customer order that seems out of line with past ones;

- an instant message asking for a memory jog on a customer's name;

- notes from one shift to the next, left on a whiteboard;

- phone calls asking for a physical stock check;

- SMS messages to arrange a delivery.

And the issue of Trust is not where, perhaps, you might expect. This is not about trust between the company and customers - a bank and someone who purports to be an account holder, for example. Designers of information systems are used to having to deal with that type of trust, even if they are not always able to get it right. This kind of trust belongs under Policies. The bank has a policy on how those accessing a bank account through an on-line system must identify themselves.

“Trust” in the context of a VPEC-T study is different - it is about the hidden, or sometimes open, relationship between any two groups involved in handling the company's information. It can even be about the lack of such a relationship: for example, between front-line staff who represent the business and the IT people who developed and maintain the computer system used to handle customer enquiries.

I have often seen manual systems kept as a backup because computer users just didn't trust the system. I remember front-desk staff in a large hotel who had their own 3 x 5 cards of past-guest information; insurance adjusters with separately maintained spreadsheets on their own PCs duplicating information in the main IT system; and order takers with a small forest of yellow tags, showing prices of the most popular products, stuck on a notice-board.

Shadow IT: Green and Bate refer to these alternative systems as ‘Shadow IT’ and point out that web-based applications available publicly - Web 2.0; Software as a Service - make it much easier to establish shadow systems. Sometimes it's a matter of speed: those post-it tags, for example. But in all these cases, and many similar ones I've seen, the information was held in the computer but the users did not trust it, or “just wanted to check”.

Sometimes, the lack of trust was based on real events rather than perceptions, but recognizing this and dealing with it openly hardly ever happened. I have struggled to think of a single case where users and IT people sat round a table while someone said “You don't trust this system to deliver reliable and accurate information. What steps do we need to take to make you feel safe with the data and comfortable with the new system?” I have heard arguments (sorry, ‘robust discussions’) where “we don't trust the system” was the underlying message, but raised voices on both sides and innuendo flying back and forth were more an exercise in venting frustration than a help in resolving this kind of problem.

This absence of trust may be justified, or it may have been justified once, but manual backup systems often just take on a life of their own, no one having said ‘stop’ when the IT system stabilized. Lack of trust can then become frozen into the culture of a user department: new staff are inducted into the same way of thinking, long after any evidence of unreliability has disappeared.

The T in VPEC-T brings this out into the open (for the first time that I've seen) and says that we have to be looking constantly for evidence of lack of trust, discussing it openly and dealing with it, if information systems are to succeed.

It's easy to see that this could be a challenge to bring off successfully, and Green and Bate have no illusions about this being a magic bullet. In fact, they point out that it's probably one of the hardest topics to broach and deal with constructively. It is something we have all known subconsciously, but no one has talked about it before. If it is routinely considered and dealt with, there is at least a good possibility that it can be removed as one of the obstacles to successful implementation of systems.

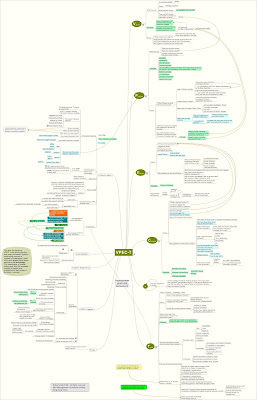

VPEC-T mindmap

Courtesy: Informationtamers